Apple has built a reputation on privacy.

With the introduction of Apple Intelligence in iOS 18 and macOS Sequoia, the tech giant claims it’s setting a new standard for AI privacy.

But the burning question is: does Apple’s promise of better privacy stand up to scrutiny?

With the rise of generative AI, privacy has been anything but a given—companies are hoovering up data to train models, putting users' personal information at risk.

But Apple, with its emphasis on privacy, is aiming to flip the narrative.

Local Processing vs. Cloud-Based AI: The Privacy Dilemma

One of Apple’s central arguments for privacy in AI lies in its on-device processing.

Apple Intelligence will perform as many tasks as possible locally, meaning that your data remains on your device and doesn’t need to be sent to the cloud for analysis.

This approach significantly reduces the chances of exposing your data to third-party systems or hackers. In a world where data often flows freely to cloud servers for processing, keeping data local is a crucial security layer.

But here’s the kicker: on-device processing, while more secure, has limitations.

As much as Apple pushes its hardware to handle AI tasks, more complex requests, such as those that need a larger language model, require more computational heft than even the most powerful iPhones can provide.

That’s where Apple’s Private Cloud Compute (PCC) steps in.

PCC is Apple’s solution to privacy concerns in cloud-based AI processing.

Instead of relying on standard cloud architecture, Apple says it designed custom servers that run on Apple silicon with a purpose-built, hardened operating system.

PCC doesn’t stop at encrypting data in transit. Apple says it also doesn’t retain user data once a request has been completed, and that anything left on a server is irretrievably wiped when the server reboots.

This "start fresh" method means Apple Intelligence is designed to forget your data as quickly as it processes it.
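One general way to build a "wiped on reboot" guarantee is cryptographic erasure: data is only ever written in encrypted form, the key lives in memory, and a reboot replaces the key. The toy Python sketch below illustrates that idea only. The class `EphemeralNode` and its methods are hypothetical names, the SHAKE-256 keystream is a stand-in for a real cipher, and none of this should be read as Apple’s actual implementation.

```python
import hashlib
import secrets


class EphemeralNode:
    """Toy model of a server whose stored data is readable only until reboot.

    Hypothetical illustration of cryptographic erasure, not production crypto.
    """

    def __init__(self):
        # The key exists only in memory and is never written to "disk".
        self._key = secrets.token_bytes(32)
        # Simulated persistent storage: name -> (nonce, ciphertext).
        self._disk = {}

    def _keystream(self, nonce, length):
        # Derive a keystream from the in-memory key and a per-record nonce.
        return hashlib.shake_256(self._key + nonce).digest(length)

    def write(self, name, data):
        nonce = secrets.token_bytes(16)
        stream = self._keystream(nonce, len(data))
        ciphertext = bytes(a ^ b for a, b in zip(data, stream))
        self._disk[name] = (nonce, ciphertext)

    def read(self, name):
        nonce, ciphertext = self._disk[name]
        stream = self._keystream(nonce, len(ciphertext))
        return bytes(a ^ b for a, b in zip(ciphertext, stream))

    def reboot(self):
        # The old key is discarded; old ciphertext can no longer be decrypted.
        self._key = secrets.token_bytes(32)


node = EphemeralNode()
node.write("request", b"my private prompt")
before_reboot = node.read("request")
node.reboot()
after_reboot = node.read("request")
```

Before the reboot, `read` returns the original bytes. Afterwards the ciphertext is still on the simulated disk, but without the old key it decrypts to unrelated bytes.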

However, while the technical guarantees Apple claims are undoubtedly more robust than policy-based promises that other companies often rely on, it’s hard to ignore that data still has to leave your device.

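Yes, it’s encrypted end-to-end between your device and the PCC server, but it has to be decrypted there to be processed, and any system that handles readable data off-device is a potential target for breaches, no matter how secure it claims to be.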

The Balancing Act: Innovation vs. Privacy

Apple’s grand vision is that privacy should be “technically enforceable,” not just managed by trust policies.

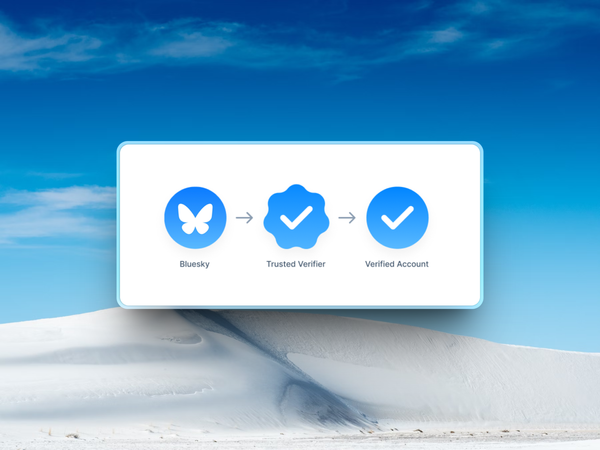

In theory, this means that it’s almost impossible for Apple or any rogue entity to access your data. The measures they’ve taken—like preventing privileged access, limiting remote management, and including external auditing for transparency—are reassuring steps.

Still, relying on external auditing to validate these claims introduces a layer of trust that tech companies haven’t always earned. Given Apple’s notorious secrecy, its decision to open its AI security to outside inspection is all the more significant.

It’s almost as if Apple is saying, "Trust us—because you can check for yourself." But with security researchers still in the early stages of evaluating PCC, only time will tell if this transparency holds.

Where Apple’s privacy narrative faces real scrutiny is in how it plans to manage partnerships with AI giants like OpenAI.

While Apple boasts that it will process most queries locally, integrating OpenAI's ChatGPT adds complexity.

Apple has taken the extra step of making the integration opt-in and asking users to approve each request before their data is sent to OpenAI. But even with this safeguard, it opens a door that goes against Apple’s closed-system reputation.
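The per-request approval described above can be pictured as a small routing decision. The Python sketch below is a simplified model under stated assumptions: `Route`, `route_request`, and the `ask_user` callback are hypothetical names invented for illustration, and the real decision logic inside Apple Intelligence is not public in this form.

```python
from enum import Enum
from typing import Callable


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"
    THIRD_PARTY = "third_party"
    DECLINED = "declined"


def route_request(
    fits_on_device: bool,
    needs_third_party: bool,
    ask_user: Callable[[str], bool],
) -> Route:
    """Pick where a request is processed (hypothetical model).

    Consent for the third-party model is requested on every call and is
    never cached, mirroring the "approve each request" behavior.
    """
    if needs_third_party:
        if ask_user("Send this request to the third-party model?"):
            return Route.THIRD_PARTY
        return Route.DECLINED
    if fits_on_device:
        # Prefer local processing whenever the device can handle the task.
        return Route.ON_DEVICE
    # Otherwise fall back to the vendor's own private cloud.
    return Route.PRIVATE_CLOUD


prompts_shown = []


def always_decline(message: str) -> bool:
    # Stand-in for a confirmation dialog; records each prompt, then declines.
    prompts_shown.append(message)
    return False


first = route_request(False, True, always_decline)
second = route_request(False, True, always_decline)
```

In this sketch the user is prompted twice for two third-party requests, because approval is never remembered between calls. A request that does not need the third-party model never triggers a prompt at all.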

Generative AI thrives on data: more data means better models, and better models mean a better user experience.

But at what cost to privacy? Apple’s biggest challenge will be balancing its AI ambitions with the privacy standards it’s built its brand on.

Can it continue to keep user data safe while competing with the likes of Google and Microsoft in AI innovation?

The Verdict: A New Potential Privacy Standard, With Caveats

Apple Intelligence is undoubtedly a bold attempt to marry AI innovation with privacy—two elements that have been at odds throughout the generative AI boom.

Its focus on on-device processing and the unique security architecture of PCC shows that Apple is serious about its privacy-first promises.

However, it’s hard to ignore that Apple still relies on cloud servers for its more demanding requests, and cloud-based AI infrastructure is exactly what poses risks for users everywhere.

Apple Intelligence represents a thoughtful approach to AI privacy, but whether it delivers a truly airtight solution will depend on how well it can safeguard data in practice.

For now, Apple’s moves are ambitious, but its privacy promises will need to be battle-tested in the real world. Only then can we determine whether this is the future of AI privacy—or just another promise in a long line of privacy pledges.